...and a peak level of 153% (impossible) in the tag.

It's impossible only for lossless audio at an integer bit depth.

Decoding lossy files tends to create increased peaks that exceed 100% if the floating point output is not truncated. This happens especially with modern, loud, heavily compressed files (i.e. victims of the loudness war). For example, foobar and Winamp measure the decoded floating point PCM content and can report such values. These pronounced peaks can be preserved by decoding to float and reducing the volume with Replay Gain before converting to integer bit depth.
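A minimal Python sketch of the two orders of operations described above, assuming decoded float PCM in which ±1.0 is full scale (100%). The helper names, the sample values and the -6 dB gain are hypothetical. A real Replay Gain value would come from the analyzer, and a real converter might also dither.

```python
def peak_percent(samples):
    """Peak of float PCM (full scale = 1.0) as a percentage, without clamping."""
    return 100.0 * max(abs(s) for s in samples)


def apply_gain_db(samples, gain_db):
    """Scale float samples by a gain given in decibels (negative = quieter)."""
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]


def to_int16(samples):
    """Quantize float PCM to 16-bit integers, clipping anything beyond full scale."""
    out = []
    for s in samples:
        v = round(s * 32767)
        out.append(max(-32768, min(32767, v)))
    return out


# Hypothetical decoded samples with overs; not taken from a real file.
decoded = [0.2, 0.9, 1.53, -1.2, 0.4]
print(peak_percent(decoded))  # about 153, only representable in float

# Convert first: the overs are flattened to the 16-bit limits.
clipped = to_int16(decoded)

# Apply a (hypothetical) -6 dB gain in float first: the peaks keep their shape.
preserved = to_int16(apply_gain_db(decoded, -6.0))
print(clipped, preserved)
```

Applying the gain while the data is still float is what keeps the peak shape. Once the samples are integers, anything above full scale has already been lost.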

Previously MC decoded to 16-bit and truncated the peaks, but I never bothered to raise an issue about that. It really doesn't matter whether you preserve or truncate peaks that last only a few samples (often just one or two). No one can hear the difference, and there is no way to know which behavior actually produces output that is closer to the original.
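To put "a few samples" in perspective, here is a sketch that finds runs of consecutive samples above full scale and converts their length to milliseconds. It assumes float PCM at 44.1 kHz. The function name and the sample values are illustrative only.

```python
def find_overs(samples, sample_rate=44100):
    """Find runs of consecutive samples whose magnitude exceeds full scale (1.0).

    Returns a list of (start_index, length_in_samples, duration_ms) tuples.
    """
    runs = []
    start = None
    for i, s in enumerate(samples):
        if abs(s) > 1.0:
            if start is None:
                start = i  # a new over begins
        elif start is not None:
            length = i - start
            runs.append((start, length, 1000.0 * length / sample_rate))
            start = None
    if start is not None:  # an over that runs to the end of the buffer
        length = len(samples) - start
        runs.append((start, length, 1000.0 * length / sample_rate))
    return runs


# Hypothetical float samples: one two-sample over and one single-sample over.
runs = find_overs([0.5, 1.1, 1.3, 0.9, -0.2, -1.05, 0.0])
for start, length, ms in runs:
    print(f"over at sample {start}: {length} sample(s), {ms:.3f} ms")
```

At 44.1 kHz, a two-sample over lasts about 0.045 ms.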

However, when the new floating point MP3 decoder was introduced, I was a bit surprised that MC didn't start reporting peak values above 100%. Perhaps the limitation is in the Replay Gain analyzer code.

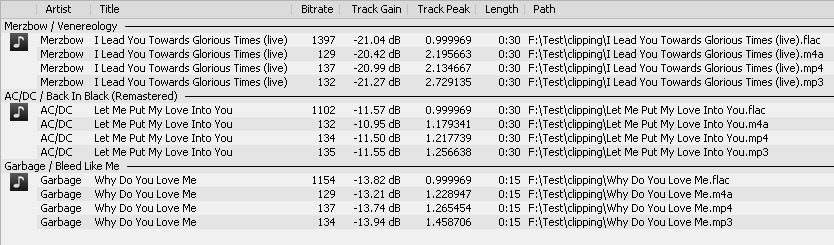

I once posted some "loud" samples to Hydrogen Audio that create pronounced peaks when they are encoded to a lossy format and decoded to float:

http://www.hydrogenaudio.org/forums/index.php?showtopic=78476 (The samples can be downloaded from the linked thread.)

One of the samples produces a peak value of 273% when it is encoded to MP3 (LAME -V5) and measured with foobar.
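Peak percentages like these can be expressed in decibels relative to full scale as 20·log10(peak / 100%). The sketch below does that conversion. The helper names are hypothetical.

```python
import math


def peak_dbfs(peak_percent):
    """Convert a peak given as % of full scale to dB relative to full scale."""
    return 20 * math.log10(peak_percent / 100)


def max_gain_without_clipping_db(peak_percent):
    """Largest gain in dB that keeps this peak at or below 100% of full scale."""
    return -peak_dbfs(peak_percent)


for pct in (100, 153, 273):
    print(f"{pct}% -> {peak_dbfs(pct):+.2f} dB, "
          f"needs at most {max_gain_without_clipping_db(pct):+.2f} dB of gain")
```

A 273% peak sits about 8.7 dB above full scale, and a 153% peak about 3.7 dB above it. Replay Gain derives its gain from loudness rather than from the peak, so whether the applied gain is enough to keep such a peak from clipping depends on the track.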

Here is a link to my other HA post, in which I explain how I experimented with making the distorted "lossy" peaks audible:

http://www.hydrogenaudio.org/forums/index.php?s=&showtopic=82112&view=findpost&p=713723

Author

Topic: Media Center doesn't read the <RATING> tag field ? (Read 7380 times)
